
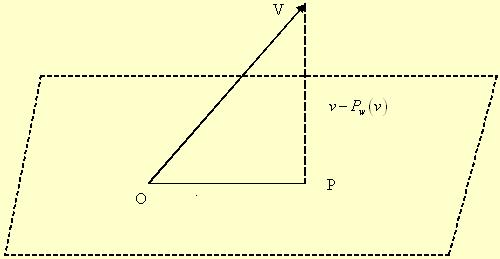

Sparse Subspace Clustering (SSC) methods based on various norms have attracted significant attention due to their empirical success. However, SSC methods presume that data points are embedded in a linear space and use the linear structure to find the sparse representation of the data. Unfortunately, real-world datasets usually reside on a special manifold where linear geometry reduces the efficiency of clustering methods. This paper extends Sparse Subspace Clustering with the \(l_1\)-norm and the Entropy norm to cluster data points that lie in submanifolds of an unknown manifold. The key idea is to provide a novel feature space by conformally mapping the original intrinsic manifolds with unknown structures to n-spheres such that angles and sparse similarities of the original manifold data are preserved. The proposed method finds an appropriate distance to replace the Euclidean distance in the Kernel SSC algorithm and the SSC algorithm with Entropy norm. Finally, we provide an experimental analysis comparing the efficiency of stereographic sparse subspace clustering with the \(l_1\)-norm, Entropy norm, and kernel on several data sets.
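The abstract does not give the formula for the conformal mapping to n-spheres, so the following is only a minimal sketch under an assumption: that the map is the standard inverse stereographic projection from the north pole, taking a point of \(\mathbb{R}^n\) to the unit sphere \(S^n \subset \mathbb{R}^{n+1}\). The function names (`inverse_stereographic`, `stereographic`, `pushed_direction`) are hypothetical and not taken from the paper. The sketch checks numerically that the image lies on the sphere, that the map is invertible, and that the angle between two directions at a point is preserved.

```python
import math

def inverse_stereographic(x):
    """Map a point x in R^n onto the unit sphere S^n in R^(n+1).

    Assumed form: standard projection from the north pole (0, ..., 0, 1).
    """
    s = sum(v * v for v in x)  # squared Euclidean norm of x
    return [2.0 * v / (s + 1.0) for v in x] + [(s - 1.0) / (s + 1.0)]

def stereographic(y):
    """Inverse of the map above; undefined at the north pole (y[-1] == 1)."""
    return [v / (1.0 - y[-1]) for v in y[:-1]]

def angle(u, v):
    """Angle in radians between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return math.acos(max(-1.0, min(1.0, dot / (nu * nv))))

def pushed_direction(p, d, h=1e-6):
    """Finite-difference image on the sphere of direction d at point p."""
    a = inverse_stereographic(p)
    b = inverse_stereographic([pi + h * di for pi, di in zip(p, d)])
    return [bj - aj for aj, bj in zip(a, b)]

point = [0.5, -1.25, 2.0]
on_sphere = inverse_stereographic(point)
norm = math.sqrt(sum(v * v for v in on_sphere))  # should be 1 up to rounding
round_trip = stereographic(on_sphere)            # should recover `point`

# Conformality check: the angle between two directions at `point` should
# match the angle between their images on the sphere.
d1, d2 = [1.0, 0.0, 0.0], [1.0, 1.0, 0.0]
angle_flat = angle(d1, d2)
angle_sphere = angle(pushed_direction(point, d1), pushed_direction(point, d2))
```

In a pipeline of the kind the abstract describes, the mapped points would presumably then be passed to an SSC solver in place of the raw data; that step is not shown here because the source gives no details of it.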
